Overview

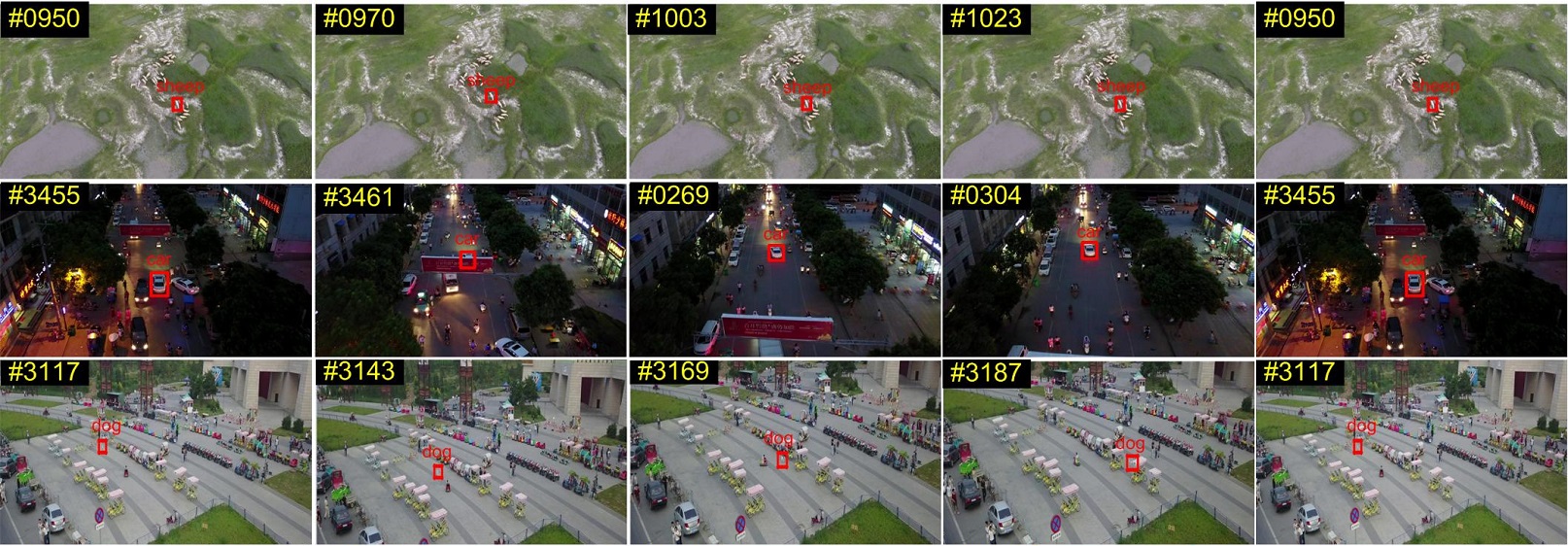

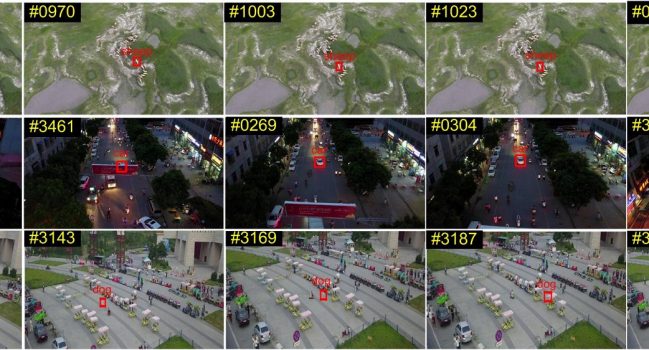

- Given an input video sequence, multi-object tracking aims to recover the trajectories of objects in the video. The challenge provides 96 sequences: 56 for training (24,201 frames in total), 7 for validation (2,819 frames in total) and 33 for testing (12,968 frames in total), all available on the download page. We manually annotate the bounding boxes of objects of different categories in each video frame.

- In addition, we provide two further annotations: occlusion ratio and truncation ratio. The occlusion ratio is the fraction of an object that is occluded. The truncation ratio indicates the degree to which parts of an object appear outside the frame. If an object is not fully captured within a frame, we annotate the bounding box across the frame boundary and estimate the truncation ratio from the region outside the image. Note that a target is skipped during evaluation if its truncation ratio is larger than 50%. Annotations on the training and validation sets are publicly available.
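The truncation rule above can be illustrated with a minimal Python sketch. It assumes the truncation ratio is the share of the bounding-box area that falls outside the image; the text only says the ratio is estimated from the region outside the image, so the exact formula is an assumption. The `Box` type, its field names and both function names are hypothetical and are not part of the VisDrone API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical box in pixels; it may extend past the frame boundary."""
    left: float
    top: float
    width: float
    height: float


def truncation_ratio(box: Box, frame_width: float, frame_height: float) -> float:
    """Share of the box area lying outside the frame (assumed definition)."""
    area = box.width * box.height
    if area <= 0:
        raise ValueError("box must have positive area")
    # Clip the box to the frame and measure what remains visible.
    visible_w = max(0.0, min(box.left + box.width, frame_width) - max(box.left, 0.0))
    visible_h = max(0.0, min(box.top + box.height, frame_height) - max(box.top, 0.0))
    return 1.0 - (visible_w * visible_h) / area


def is_skipped(box: Box, frame_width: float, frame_height: float,
               threshold: float = 0.5) -> bool:
    """A target is skipped when its truncation ratio is larger than 50%."""
    return truncation_ratio(box, frame_width, frame_height) > threshold
```

Under this assumed definition, a box with exactly half of its area outside the frame has a ratio of 0.5 and is still evaluated, because the rule skips only ratios strictly larger than 50%.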

Dates

- [05.15]: Training, validation and testing data, and evaluation software released

- [07.15]: Result submission deadline

- [08.28]: Challenge results released

- [08.28]: Winner presents at ECCV 2020 Workshop

Challenge Guidelines

- The multi-object tracking evaluation page describes in detail how submissions are scored. To limit overfitting while giving researchers more flexibility to test their algorithms, we have divided the test set into two splits: test-dev and test-challenge.

- Test-dev (17 video clips) is designed for debugging and validation experiments and allows unlimited submissions. Up-to-date results on the test-dev set can be viewed on the multi-object tracking leaderboard.

- Test-challenge (16 video clips) is used for the workshop competition, and the results will be announced during the ECCV 2020 Vision Meets Drone: A Challenge workshop. We encourage participants to use the provided training data, but additional training data is also allowed. The use of external data must be indicated during submission.

- The train+val video clips and corresponding annotations, as well as the video clips in the test-challenge set, are available on the download page. Before participating, every user is required to create an account using an institutional email address. If you have any problems with registration, please contact us. After registration, users should submit their results through their accounts. Submitted results will be evaluated according to the rules described on the evaluation page, which gives a detailed explanation.

Tools and Instructions

We provide extensive API support for the VisDrone images, annotations and evaluation code. Please visit our GitHub repository to download the VisDrone API. For additional questions, please find the answers here or contact us.