Object Detection Evaluation

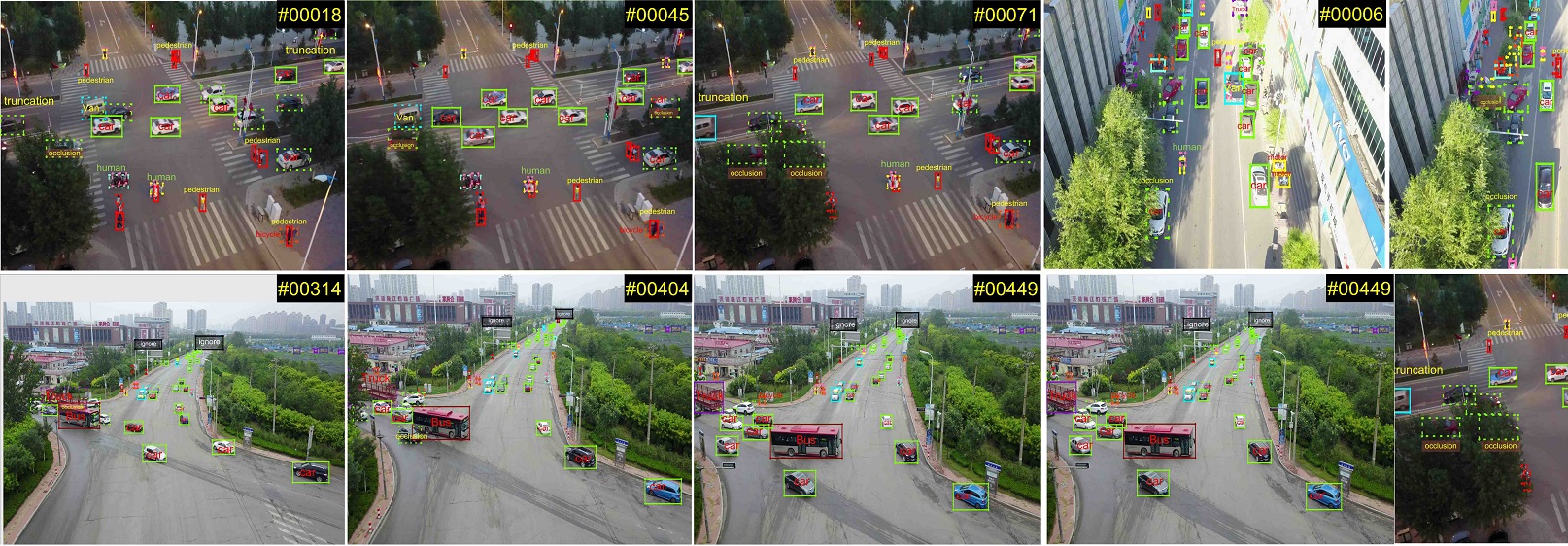

For Task 1 (i.e., object detection in images), we mainly focus on humans and vehicles in daily life and define ten object categories of interest: pedestrian, person, car, van, bus, truck, motor, bicycle, awning-tricycle, and tricycle. Notably, a human who is standing or walking is classified as a pedestrian; otherwise, the human is classified as a person. Some rarely occurring objects (e.g., machineshop trucks, forklift trucks, and tankers) are ignored in evaluation. In addition, objects whose truncation ratio is larger than 50% are skipped during evaluation. To obtain results on the VisDrone2020 test-challenge set, participants must generate their results in the default format (see the results format) and upload them to the evaluation server.
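The inclusion rules above can be sketched as a filter over ground-truth boxes. This is a minimal Python illustration under stated assumptions: the `GroundTruthBox` record, its `truncation_ratio` field (a fraction in [0, 1]), and the `is_evaluated` helper are hypothetical names, not part of the VisDrone toolkit or its annotation format. The sketch only decides whether a box counts as an evaluation target; it does not model how the official code treats detections that overlap ignored objects.

```python
from dataclasses import dataclass

# The ten categories evaluated in Task 1, as listed in the text.
EVALUATED_CATEGORIES = {
    "pedestrian", "person", "car", "van", "bus",
    "truck", "motor", "bicycle", "awning-tricycle", "tricycle",
}


@dataclass
class GroundTruthBox:
    # Hypothetical record for illustration; real annotation files differ.
    category: str
    truncation_ratio: float  # assumed: fraction of the object cut off, in [0, 1]


def is_evaluated(box: GroundTruthBox) -> bool:
    """Return True if this ground-truth box counts during evaluation."""
    if box.category not in EVALUATED_CATEGORIES:
        return False  # rare objects (e.g., forklift trucks) are ignored
    return box.truncation_ratio <= 0.5  # truncation above 50% is skipped


boxes = [
    GroundTruthBox("pedestrian", 0.0),
    GroundTruthBox("forklift truck", 0.0),
    GroundTruthBox("car", 0.6),
    GroundTruthBox("van", 0.5),
]
kept = [b.category for b in boxes if is_evaluated(b)]
print(kept)  # ['pedestrian', 'van']
```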

We require each evaluated algorithm to output a list of detected bounding boxes with confidence scores for each test image in the predefined format. Please see the results format for more detail. Similar to the evaluation protocol of MS COCO [1], we use the AP, AP<sup>IoU=0.50</sup>, AP<sup>IoU=0.75</sup>, AR<sup>max=1</sup>, AR<sup>max=10</sup>, AR<sup>max=100</sup>, and AR<sup>max=500</sup> metrics to evaluate detection algorithms. Unless otherwise specified, the AP and AR metrics are averaged over multiple intersection over union (IoU) values; specifically, we use ten IoU thresholds, [0.50:0.05:0.95] (i.e., 0.50, 0.55, ..., 0.95). All metrics are computed allowing at most 500 top-scoring detections per image (across all categories). These criteria penalize missed detections as well as duplicate detections (two detection results for the same object instance). AP is the primary metric for ranking the algorithms. The metrics are described in the following table.
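The matching step behind these criteria can be sketched as follows. This is a minimal Python illustration, assuming boxes are `(x, y, width, height)` tuples and detections are dicts with hypothetical `"box"` and `"score"` keys. The greedy, highest-score-first matching mirrors the COCO-style procedure the text refers to, but it is not the official VisDrone code. Within one category and one IoU threshold, each ground-truth object can be matched at most once, so a second detection of the same object becomes a false positive.

```python
# Ten IoU thresholds [0.50:0.05:0.95]; rounding removes float noise.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def iou(a, b):
    """Intersection over union of two (x, y, width, height) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def keep_top_scoring(detections, max_dets=500):
    """Keep at most max_dets highest-scoring detections of one image."""
    return sorted(detections, key=lambda d: d["score"], reverse=True)[:max_dets]


def match(detections, gt_boxes, threshold):
    """Greedily match detections (highest score first) to unmatched GT boxes.

    Returns one flag per detection in score order: True for a true
    positive, False for a false positive. A detection whose best
    candidate object is already matched counts as a false positive,
    which is how duplicate detections are penalized.
    """
    matched = [False] * len(gt_boxes)
    flags = []
    for det in sorted(detections, key=lambda d: d["score"], reverse=True):
        best_iou, best_gt = threshold, -1
        for g, gt in enumerate(gt_boxes):
            if matched[g]:
                continue  # each object can be matched only once
            overlap = iou(det["box"], gt)
            if overlap >= best_iou:
                best_iou, best_gt = overlap, g
        if best_gt >= 0:
            matched[best_gt] = True
        flags.append(best_gt >= 0)
    return flags


gt = [(0, 0, 10, 10)]
dets = [
    {"box": (0, 0, 10, 10), "score": 0.9},
    {"box": (1, 0, 10, 10), "score": 0.8},  # duplicate of the same object
]
print(match(dets, gt, 0.5))  # [True, False]
```

Running `match` once per value in `IOU_THRESHOLDS` gives the per-threshold results that AP and AR are averaged over.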

| Measure | Perfect | Description |
|---|---|---|
| AP | 100% | The average precision over all 10 IoU thresholds (i.e., [0.50:0.05:0.95]) and all object categories |
| AP<sup>IoU=0.50</sup> | 100% | The average precision over all object categories at an IoU threshold of 0.50 |
| AP<sup>IoU=0.75</sup> | 100% | The average precision over all object categories at an IoU threshold of 0.75 |
| AR<sup>max=1</sup> | 100% | The maximum recall given at most 1 detection per image, averaged over all IoU thresholds and object categories |
| AR<sup>max=10</sup> | 100% | The maximum recall given at most 10 detections per image, averaged over all IoU thresholds and object categories |
| AR<sup>max=100</sup> | 100% | The maximum recall given at most 100 detections per image, averaged over all IoU thresholds and object categories |
| AR<sup>max=500</sup> | 100% | The maximum recall given at most 500 detections per image, averaged over all IoU thresholds and object categories |
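Given the true/false-positive flags produced by matching, the AP and AR entries in the table can be sketched as below for a single category at a single IoU threshold. The 101-point interpolation of precision follows the COCO toolkit and is an assumption about how the VisDrone code interpolates; `average_precision` and `max_recall` are hypothetical helper names. For brevity, `max_recall` applies the k-detection cap to one category's detections in each image, whereas the text applies the cap across all categories, so a faithful implementation would select the top-k detections per image before splitting them by category.

```python
def average_precision(scored_flags, num_gt):
    """AP for one category at one IoU threshold.

    scored_flags: (score, is_true_positive) pairs pooled over all images.
    num_gt: number of evaluated ground-truth objects of this category.
    Assumes COCO-style 101-point interpolated precision.
    """
    if num_gt == 0:
        return float("nan")  # undefined without ground truth
    ordered = sorted(scored_flags, key=lambda sf: sf[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ordered:
        tp += is_tp
        fp += not is_tp
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Interpolate: precision at recall r is the best precision at recall >= r.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    total = 0.0
    for r in (i / 100 for i in range(101)):
        # Precision at the first point whose recall reaches r, else 0.
        total += next((p for rec, p in zip(recalls, precisions) if rec >= r), 0.0)
    return total / 101


def max_recall(flags_per_image, num_gt, k):
    """Recall at one IoU threshold using the top-k detections per image.

    flags_per_image: per-image lists of true-positive flags, already in
    descending score order (e.g., as returned by a greedy matcher).
    """
    if num_gt == 0:
        return float("nan")
    tp = sum(sum(flags[:k]) for flags in flags_per_image)
    return tp / num_gt


ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
ar_1 = max_recall([[True, False, True], [False, True]], num_gt=4, k=1)
```

Because greedy matching processes detections in score order, truncating a flag list to its first k entries gives the same flags as matching only the top-k detections.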

The above metrics are calculated over the ten object categories of interest. For a comprehensive evaluation, we will also report the performance for each object category. The evaluation code for object detection in images is available on the VisDrone GitHub.
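Once AP has been computed for every category and IoU threshold, the summary numbers and the per-category results can be combined as in this sketch. It assumes a hypothetical `ap_table` dict mapping each category name to its ten AP values, ordered by IoU threshold 0.50, 0.55, ..., 0.95, with NaN marking a category that has no ground truth; like the COCO toolkit, it averages only over the valid entries. The helper names `nanmean` and `summarize` are illustrative, not part of the VisDrone code.

```python
import math


def nanmean(values):
    """Mean of the non-NaN values, or NaN if there are none."""
    valid = [v for v in values if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else float("nan")


def summarize(ap_table):
    """Return (overall metrics, per-category AP) from a category -> 10 APs table."""
    per_category = {cat: nanmean(vals) for cat, vals in ap_table.items()}
    overall = {
        # AP: averaged over all IoU thresholds and all categories.
        "AP": nanmean([v for vals in ap_table.values() for v in vals]),
        # Index 0 is IoU=0.50 and index 5 is IoU=0.75 in the threshold list.
        "AP_IoU=0.50": nanmean([vals[0] for vals in ap_table.values()]),
        "AP_IoU=0.75": nanmean([vals[5] for vals in ap_table.values()]),
    }
    return overall, per_category


overall, per_category = summarize({
    "car": [0.8] * 10,
    "van": [0.4] * 10,
    "bus": [float("nan")] * 10,  # no ground truth for this category
})
print(round(overall["AP"], 6))  # about 0.6
```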

References:

[1] T.-Y. Lin, M. Maire, S. J. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, "Microsoft COCO: Common objects in context," in Proceedings of the European Conference on Computer Vision (ECCV), 2014, pp. 740–755.